The tech we use matters: Why we support AI procurement standards (SB 892) in California and beyond

Governments across the country are using AI to determine everything from who gets unemployment to who is investigated by Child Protective Services. In California, the Department of Transportation recently awarded its first generative AI contract in the state’s history, aiming to improve safety on roadways.

These consequential decisions are being made by biased and usually opaque algorithms.

Without the state setting stringent procurement standards for the technology it uses to serve the people of this state, we risk deploying technologies that can discriminate and deny access at scale. That’s why, in alignment with our AI Policy Principles, we support SB 892 in California.

SB 892 requires the Department of Technology to develop and adopt regulations to create an artificial intelligence risk management standard. The standard must also include a detailed risk assessment procedure for procuring automated decision systems (ADS).

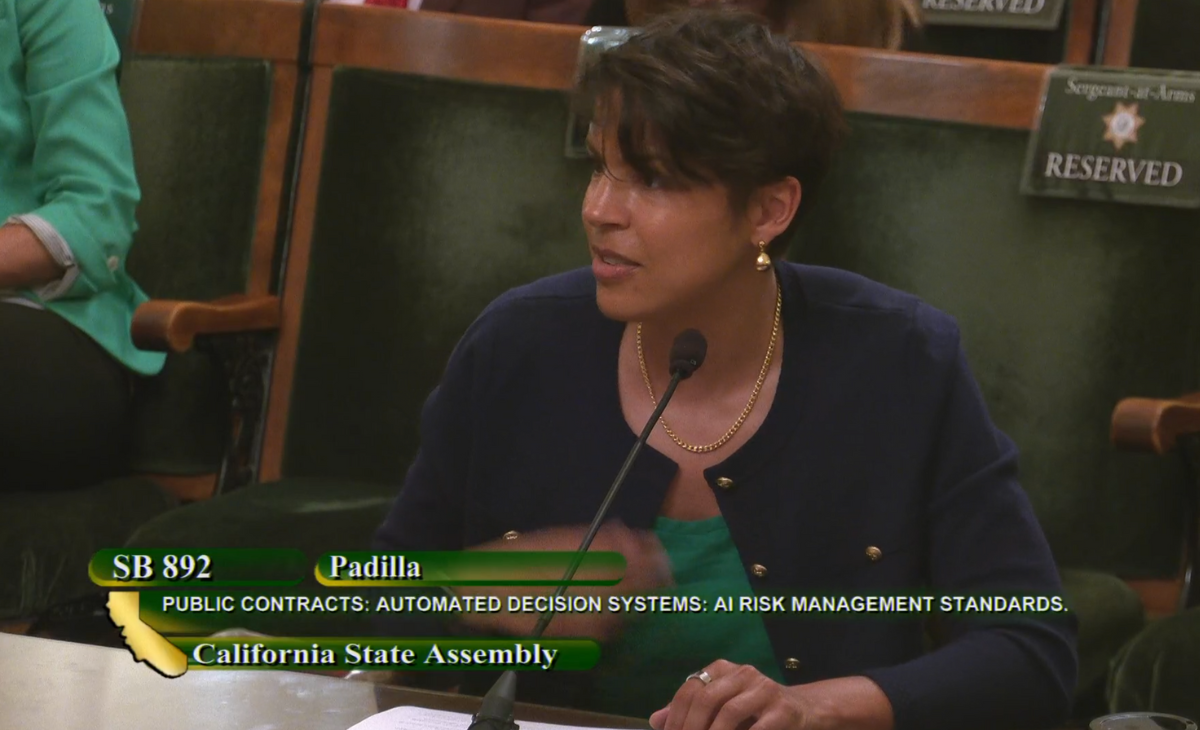

Check out the testimony our Founder and CEO Catherine Bracy gave tof the California Assembly Privacy Committee to learn more about the bill and why we’re in support:

From left to right: TechEquity Founder and CEO Catherine Bracy, California Senator Alex Padilla, and Daniel Zhang, Senior Manager for Policy Initiatives at Stanford Institute for Human-Centered Artificial Intelligence (HAI).

Catherine Bracy’s Testimony

We are proud to support SB 892 to ensure that as California considers the adoption of AI systems, we establish common-sense guardrails around the use of this technology.

The state government is rightly focused on reducing backlogs and improving services for our communities. Technology can be a useful and important tool to achieve those efficiencies. But we also think it’s important to learn from several examples where the rapid adoption of technology—often without serious input from public sector workers and communities—created massive delays, wrongful denials, and problems that negatively impacted constituents seeking services, leaving taxpayers to bear the brunt of fixing costly mistakes.

During the pandemic, millions struggled to receive unemployment insurance in a timely fashion. In February 2022, a Legislative Analyst's Office (LAO) report found that hundreds of thousands of workers' claims were wrongfully denied by the Employment Development Department (EDD) because of an algorithm.

- That algorithm reviewed almost 10 million claims and identified 1.1 million of them as fraudulent.

- EDD stopped payments on those claims without notifying applicants.

- However, more than half of the claims denied by the algorithm were later confirmed to be legitimate.

- This experience was not isolated to California. This same algorithm has been sold to 42 states and is now the subject of a complaint filed at the Federal Trade Commission.

Without the guardrails SB 892 provides, California may be taking a step backward in the quality of service delivery when using AI. What distinguishes the public sector from the private actors who develop and sell this technology is that the public sector is responsible for providing services to everyone—and ensuring that those services are safe and fair. That is why it is common to have specific and heightened procurement standards for public contracts.

People count on the state in some of the most difficult moments in their lives. The public’s trust in the government’s ability to deliver these critical services has never been more important.

SB 892 recognizes this reality and positions California to set a world-leading standard for the use of AI systems and emerging technology in high-risk environments.

—

Check out our full 2024 legislative agenda to learn more about the bills we're supporting.