We need to address algorithmic bias: Why we support AB 2930 in California

Algorithms increasingly determine where we live, how we work, and who gets arrested. These are highly consequential decisions that are being automated at a massive scale. But bias is built into these algorithms, from the code itself to the data they are fed.

AB 2930 aims to remove bias from automated decision tools (ADTs) by requiring developers and users of ADTs to conduct and document an impact assessment covering the tool’s intended use, the makeup of its data, and the rigor of its statistical analysis. The assessment must also include an analysis of potential adverse impact on the basis of race, color, ethnicity, sex, or any other classification protected by state law.
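
As a rough illustration of what an adverse-impact check in such an assessment could look like, the Python sketch below compares favorable-outcome rates across groups. The records, group labels, and function names are hypothetical, and the ratio it computes is one common fairness metric, not a method that AB 2930 prescribes.

```python
from collections import Counter

# Hypothetical toy records: (group label, whether the tool gave a favorable outcome).
# These values are invented for illustration and are not real data.
DECISIONS = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def selection_rates(decisions):
    """Return the share of favorable outcomes for each group."""
    totals = Counter(group for group, _ in decisions)
    favorable = Counter(group for group, ok in decisions if ok)
    return {group: favorable[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # No group received a favorable outcome, so the ratio is undefined.
        raise ValueError("no favorable outcomes in the data")
    return {group: rate / best for group, rate in rates.items()}


rates = selection_rates(DECISIONS)
ratios = impact_ratios(rates)
for group in sorted(rates):
    print(f"{group}: selection rate {rates[group]:.2f}, impact ratio {ratios[group]:.2f}")
```

A ratio well below 1.0 for a group would be a signal to investigate further. US employment guidelines use four-fifths (0.8) as a rule of thumb, though no single number proves or rules out discrimination.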

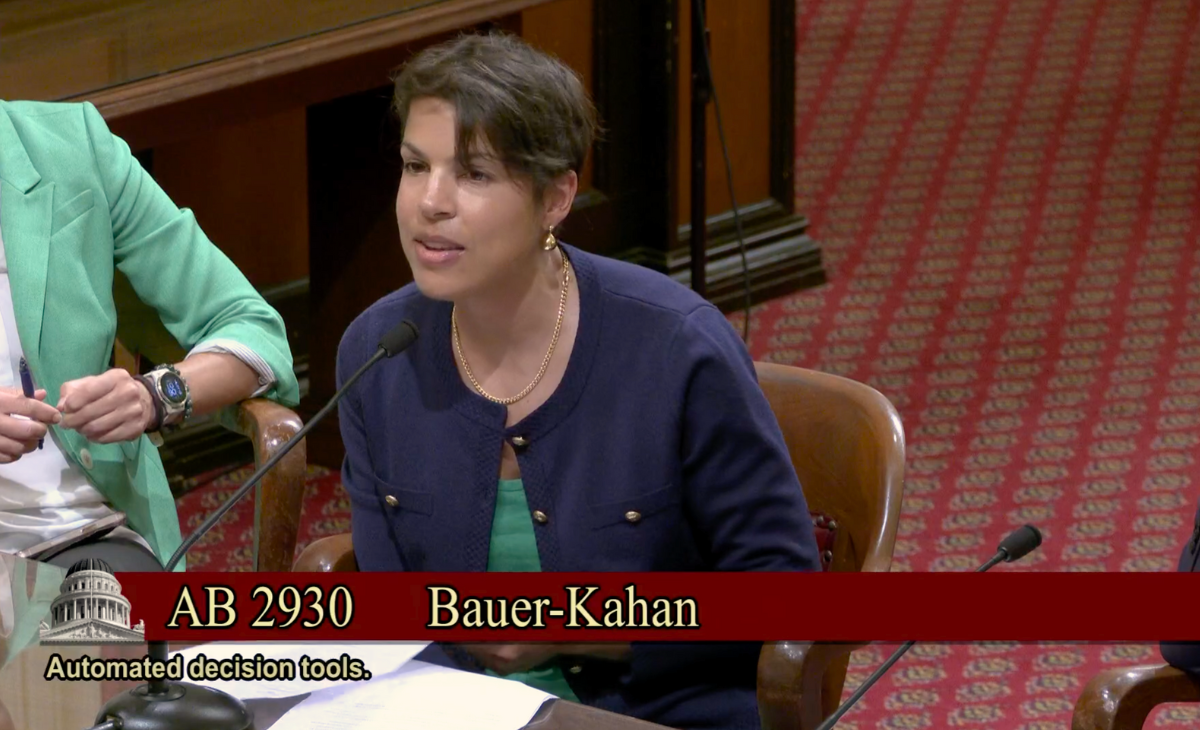

The bill’s scope is broad: it would regulate ADTs of all kinds, including those used to make hiring and other workplace-related decisions, as well as ADTs deployed in housing, such as tenant-screening tools. Check out the testimony our Founder and CEO Catherine Bracy gave to the California Senate Judiciary Committee to learn more about the bill and why we support it:

Catherine Bracy’s Testimony

We are proud to support AB 2930, which rightly aims to end discrimination by automated decision-making systems. AB 2930 would enact common-sense guardrails that would:

- Require developers and deployers to conduct impact assessments to test for discrimination before the tool is brought to market;

- Prohibit the sale or use of an ADT that may create a discriminatory outcome until that adverse impact has been addressed and resolved;

- Provide consumers with notice before the tool is used, an explanation of the outcome after its use, and the right to correct inaccurate information.

Enacting the protections outlined in AB 2930 is critical, as we have already seen the harmful and discriminatory effects that automated decision-making systems can have on our communities. Examples range from tools meant to predict how likely a defendant is to recidivate, which were found to falsely flag Black defendants as future criminals at twice the rate of white defendants, to systems that predictively score rental housing applicants, à la Minority Report, on whether they are likely to pay rent on time.

TechEquity’s research has found that these predictive scoring tools, which make guesses about people’s future behavior based on comparisons to others who are “like” them, are being used by almost twenty percent of landlords in California, and that number is growing. Our research has also found that these tools are disproportionately relied upon by landlords who rent to more vulnerable people.

These tools often operate as black boxes that offer impacted people no way to see, correct, or dispute the results, or to understand the logic behind an algorithm’s recommendation or prediction. AB 2930 will provide consumers and workers with more information and greater transparency into the use of these tools in critical areas of their lives, while requiring developers and deployers to take more responsibility for reducing the likelihood of discrimination.

We appreciate Assemblymember Bauer-Kahan’s leadership on this important issue and her willingness to work with various stakeholders to ensure that this legislation provides meaningful protections to those impacted by automated decision tools (ADTs).

—

Check out our full 2024 legislative agenda here and learn more about the bills we’re supporting in the California legislature.