Algorithms are making life-changing decisions about you—but how do they work?

In 2022, Gene Lokken fell at home and fractured his leg and ankle, a common story for a 91-year-old. After a little over a month, he was well enough to go to physical therapy. The only problem: his insurance company only paid for 19 days of therapy, a shock to his doctors who described his muscles as “paralyzed and weak.”

His family appealed the decision, but the insurance company rejected the appeal. He had no acute medical issues, they wrote, and could feed himself. His family had no choice but to pay for his care themselves. By the time Gene passed away, they had spent approximately $150,000 on his treatment.

This is also an increasingly common story, especially in the age of automated decision systems.

Across the country, people are being denied healthcare, housing, loans, jobs, benefits, and more by companies and governments using automated decision systems (ADS). Chances are that every day, your life is touched or shaped by an ADS.

This technology grows more pervasive every day, but most of us know very little about how it’s being used to shape critical decisions about our lives. To understand what we can do about this, we need to start by understanding what an ADS is in the first place.

What is an Automated Decision System?

Broadly, Automated Decision Systems (ADS), also known as Automated Decision Tools (ADT) or Automated Decision-Making Tools (ADMT), are tools that take data and put it through an algorithm, or what we may have called in the past a formula or a set of rules. Then, based on how that data stacks up against the rules or formula (or against information that the algorithm “inferred” from the data), the system generates a recommendation, a score, a prediction, or a decision.

ADS are used in lots of ways and show up in various parts of our lives, like:

- To automatically adjust airfare prices based on what an individual is potentially willing to pay

- To assess employee performance, especially if an organization is planning to do layoffs

- To determine whether defendants should be detained or released on bail pending trial based on “predictions” of how they’ll behave

Why use these tools instead of relying on human decision-makers?

Why do people use ADS?

People, companies, and governments use Automated Decision Systems for a variety of reasons.

Organizations use ADS to increase efficiency: a decision that once required a one-on-one assessment and review by a human can now be made by a machine and affect thousands of people. Many organizations also believe that they can use ADS to replace human decision-makers, saving money on staffing. Everything is simpler, right?

The problem is that these systems don’t make the decisions themselves any simpler; they just simplify the process. They take a three-dimensional person or situation and flatten it to make potentially life-changing decisions.

“Individuals may be judged not because of what they’ve done, or what they will do in the future, but because inferences or correlations drawn by algorithms suggest they may behave in ways that make them poor credit or insurance risks, unsuitable candidates for employment or admission to schools or other institutions, or unlikely to carry out certain functions.”

Edith Ramirez, former Chairwoman of the Federal Trade Commission (FTC)

In the quest to simplify decision-making and/or cut costs, companies and governments can use ADS in ways that negatively impact everyday people.

In Pennsylvania, social workers use an ADS called the Allegheny Family Screening Tool, which provides recommendations on which families should be investigated for neglect.

The idea: to provide social workers with more support and help children get the interventions they need. The execution: the ADS was found to have racial bias and bias against families with disabled parents or children.

Nevertheless, welfare agencies in at least 26 states and Washington, D.C., have considered using algorithmic tools, and at least 11 have deployed them.

How are ADS developed?

How do Automated Decision Systems get that way? You don’t need to have technical expertise to understand how these systems are made—and the impact of how they’re made.

ADS often overlap with what many people think of as AI. They typically fall into three main categories:

- Rule-based systems: Hard-coded with “rules” – e.g. subtract 10 points from a job application if the applicant doesn’t have more than 5 years of experience

- Machine Learning systems: Trained on previous data – e.g. rank job applicants based on how similar they are to high-performing employees

- Generative AI systems/Large Language Models (LLMs): Trained on massive datasets to recognize patterns and create new content or emulate human decision-making – e.g. generate assessments of candidates based on their resumes and give them a score accordingly
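To make the first two categories concrete, here is a minimal Python sketch. It is an illustration only, not how any real hiring tool works: the `Applicant` class, the starting score of 100, and both function names are assumptions made up for this example, and `similarity_rank` is a deliberately simple stand-in for a trained machine learning model.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    name: str
    years_experience: float


def rule_based_score(applicant: Applicant, base_score: int = 100) -> int:
    """Rule-based: one hard-coded rule, taken from the example in the list above."""
    score = base_score
    # Subtract 10 points unless the applicant has more than 5 years of experience.
    if applicant.years_experience <= 5:
        score -= 10
    return score


def similarity_rank(applicants, past_high_performers):
    """Stand-in for machine learning: rank by closeness to past high performers.

    The "pattern" here is just the average experience of past high performers.
    """
    target = sum(p.years_experience for p in past_high_performers) / len(past_high_performers)
    return sorted(applicants, key=lambda a: abs(a.years_experience - target))


# Hypothetical data, invented for this example.
applicants = [Applicant("Applicant A", 3), Applicant("Applicant B", 8)]
past_high_performers = [Applicant("Past hire X", 10), Applicant("Past hire Y", 12)]

scores = {a.name: rule_based_score(a) for a in applicants}
ranking = [a.name for a in similarity_rank(applicants, past_high_performers)]
```

Both functions reduce a person to a single number or rank. The second also hints at how a system that rewards resemblance to past hires can repeat whatever patterns shaped those past hires.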

Guided by these rules, an ADS generates an outcome, which can be a score or a recommendation like “reject” or “accept.” The problem is that each of the “rules” that an ADS uses to generate an output introduces potential flaws and bias.

For instance, researchers at the University of Washington tested three open-source LLMs and found they favored resumes from white-associated names 85% of the time. Over the 3 million job, race, and gender combinations tested, they found that the models preferred other candidates over Black men nearly 100% of the time.

Why? It’s that adage that you are what you eat. These LLMs were trained on a history of racial discrimination, so that discrimination was baked into their recommendation.

Because the whole purpose of these systems is to automate processes, it’s rare that people catch these biases and interrogate them before harm is already done.

What are ADS using to make decisions?

At some point in developing an ADS, a company decides that it’s ready for use. Data is fed into the algorithm, which interprets and analyzes the data it’s fed based on the rules established during development.

Thousands of companies, governments, and other organizations are collecting data on you from forms you submit, data brokers, and other sources unknown to the public. This data is sometimes inaccurate or incomplete, and some of it was collected without people’s consent, including someone’s precise physical location.

In 2019, The New York Times analyzed a file from a company within the data industry and discovered that it contained the everyday location information for over 12 million Americans over the span of two years. This company isn’t even considered to be ‘Big Tech’.

In some instances, when the algorithm is analyzing data based on its programmed rules, it also infers additional information about a person based on given data points, like inferring someone’s race from their zip code. This can lead to automated discrimination, hidden behind a veneer of algorithmic objectivity.
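A small Python sketch can show how this happens even when a system never sees race at all. Everything here is made up for illustration: the zip codes, the two groups, and the `HIGH_RISK_ZIPS` rule are hypothetical and do not describe any real system. The point is only that a rule written around zip codes can produce very different outcomes for different groups when where people live is tied to who they are.

```python
from collections import defaultdict

# Made-up toy records: (zip_code, group). The decision rule never looks at "group".
applicants = [
    ("00001", "group_a"), ("00001", "group_a"), ("00001", "group_a"), ("00001", "group_b"),
    ("00002", "group_b"), ("00002", "group_b"), ("00002", "group_b"), ("00002", "group_a"),
]

# Hypothetical rule: deny anyone from a zip code flagged as "high risk".
HIGH_RISK_ZIPS = {"00002"}


def decide(zip_code: str) -> str:
    """Return a decision using only the zip code."""
    return "deny" if zip_code in HIGH_RISK_ZIPS else "approve"


def approval_rate_by_group(records):
    """Compare outcomes by group, even though the rule itself ignores group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for zip_code, group in records:
        total[group] += 1
        if decide(zip_code) == "approve":
            approved[group] += 1
    return {group: approved[group] / total[group] for group in total}


rates = approval_rate_by_group(applicants)
```

In this toy data, `rates` comes out to 0.75 for `group_a` and 0.25 for `group_b`. Checking outcomes by group like this is one simple way an auditor might spot a proxy at work.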

All of these issues can go unchecked because of a lack of transparency around how data is gathered and used.

What are the potential harmful outcomes of ADS?

Sometimes the data and/or the outputs of an Automated Decision System are flat-out inaccurate, causing harm that’s caught all too late, if at all. Humans are also prone to mistakes and acts of discrimination. But the use of ADS threatens to multiply that discrimination at an unprecedented speed and scale.

In Michigan, for example, over 40,000 people were stripped of unemployment benefits after an ADS labeled their claims as fraudulent. It took 8 years just for 3,000 of them to receive payouts. What happened to these people and their families in the meantime?

This is one of the reasons why decisions made or influenced by an ADS must come with explanations of the data used (and its source), the rationale behind the decision, and how that data factored into the decision-making.

“When someone asks for an explanation of why they have been denied a loan by the bank, responding “the algorithm outputted a ‘no’ to your request”[…] cannot be an explanation of why the person was denied; it isn’t an explanation any more than “Because I said so” is an explanation to a child of why they cannot have a second piece of candy.”

Tarek R. Besold and Sara L. Uckelman, authors of “The What, the Why, and the How of Artificial Explanations in Automated Decision-Making”

With explanations, ADS users can make truly informed final decisions. Those being assessed by an ADS can correct inaccuracies and/or advocate for their rights if they suspect a faulty or discriminatory output. And the company that made the ADS can build trust with its user base.
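As a rough sketch of what such an explanation could look like in practice, the Python example below keeps a record of every piece of data used, where it came from, and how it moved the score. All of the specifics are assumptions invented for this example: the field names, the income cutoff of 3000, the point values, and the approval threshold of 50 are hypothetical and not drawn from any real lender or law.

```python
from dataclasses import dataclass


@dataclass
class Factor:
    name: str      # which piece of data was used
    value: object  # the value the system saw
    source: str    # where that data came from
    points: int    # how much it moved the score
    reason: str    # the plain-language rule that applied


def decide_with_explanation(record: dict, threshold: int = 50):
    """Score a loan-style application and keep a trail of every factor used."""
    factors = []

    income, income_source = record["monthly_income"]
    if income >= 3000:
        factors.append(Factor("monthly_income", income, income_source, 40, "income at or above 3000"))
    else:
        factors.append(Factor("monthly_income", income, income_source, 10, "income below 3000"))

    missed, missed_source = record["missed_payments"]
    if missed == 0:
        factors.append(Factor("missed_payments", missed, missed_source, 30, "no missed payments"))
    else:
        factors.append(Factor("missed_payments", missed, missed_source, -20, "one or more missed payments"))

    score = sum(f.points for f in factors)
    outcome = "approve" if score >= threshold else "deny"
    return outcome, score, factors


# Hypothetical application: each value is paired with the source it came from.
application = {
    "monthly_income": (2500, "application form"),
    "missed_payments": (2, "data broker"),
}
outcome, score, factors = decide_with_explanation(application)

for f in factors:
    print(f"{f.name} = {f.value} (from {f.source}): {f.points:+d} points, {f.reason}")
```

With a trail like this, a person who was denied could see that the missed-payments figure came from a data broker and challenge it if it is wrong.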

What can we do about it?

Too often, people flick on autopilot and walk away from the wheel, leaving these Automated Decision Systems to steer decisions without oversight, regardless of the risk they pose to the people in their paths. However, there are measures we can take to mitigate the harmful impacts of ADS.

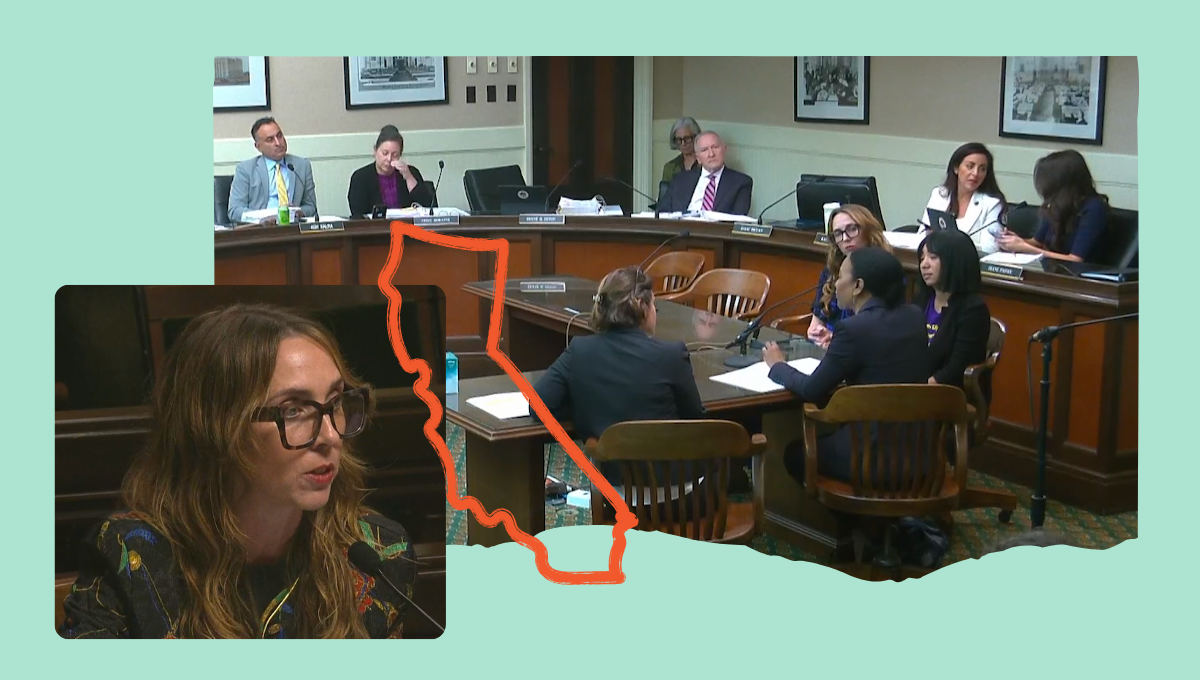

In California, we’re sponsoring the Automated Decision Safety Act (AB 1018) to protect everyday people against automated discrimination.

AB 1018 would ensure that tech companies follow common-sense blueprints to prevent discrimination and provide clear explanations to the public on how they use these tools. That way, Californians can trust that these life-changing decisions aren’t being made lightly.

We’re also supporting other bills this year that address the use of ADS in housing and the workplace and ensure tech isn’t used to drive up prices for everyday essentials.

How can you help?

We know that Gene Lokken and his family aren’t the only people who have been negatively impacted by Automated Decision Systems.

Have you been on the receiving end of an AI-driven decision that you believe was biased or discriminatory? We want to hear your story. Fill out the form below, and one of our organizers will follow up with you shortly.
