We must ensure that automated decision systems are safe

Applying to rent an apartment? Frustrated that your insurance won’t cover an X-ray? Maybe you were recently laid off and you’re filing for unemployment benefits while also applying for new jobs.

These scenarios may seem random, but they have one key thing in common: companies and governments are increasingly using automated decision systems (ADS) to make the decisions in each of them.

The problem is that there is little regulatory oversight or transparency when it comes to these systems, despite their use in making consequential decisions and mounting evidence that they can produce flawed or biased results.

Most people don’t even know that these systems are being used on them. Even when they do, what recourse do they have to correct mistakes and challenge biases?

We need to bring protections for Californians up to speed with today’s technologies and their impact on our lives. The Automated Decision Safety Act (AB 1018) will do just that.

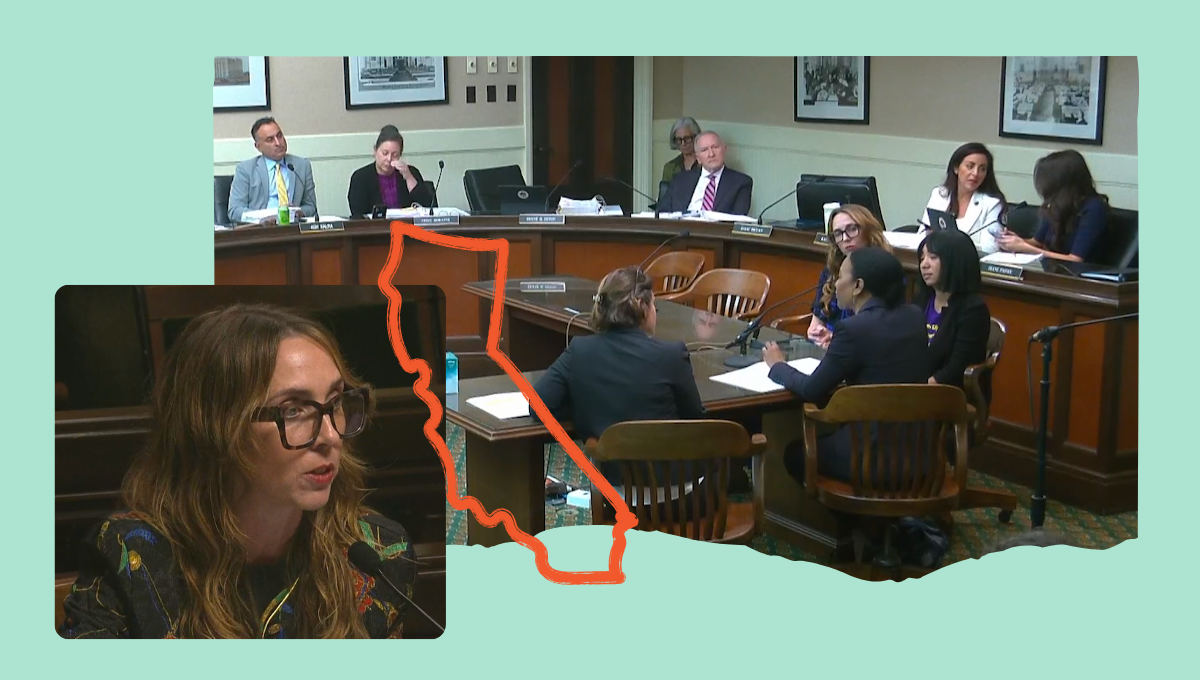

That’s why Charlotte Burrows, former Commissioner of the U.S. Equal Employment Opportunity Commission, and our Chief Program Officer, Samantha Gordon, testified in favor of AB 1018 before the California State Assembly earlier this year. This is what they had to say:

Charlotte Burrows’s Testimony

Assembly Judiciary Committee | April 29, 2025

Good morning, Chair Kalra and members of the Committee. I’m honored to share my perspective on employment discrimination that may arise from automated decision systems. My views today are based on my more than two decades’ experience in civil rights enforcement, litigation, and policy and do not represent the position of any entity with which I am affiliated.

California has long led in protecting workers from discrimination based on race, national origin, religion, sex, disability, age, and other characteristics that are irrelevant to job performance. To ensure those protections remain a reality for workers, laws must effectively address discrimination in all its forms, including when it results from automated decision systems.

Such systems are increasingly used in employment decisions, from hiring to firing and nearly everything in between. Although some systems may offer benefits, like other powerful technologies, they require sensible guardrails to ensure their benefits outweigh potential harms.

The urgent need to address these harms led me to launch an initiative on artificial intelligence when I served as Chair of the U.S. Equal Employment Opportunity Commission. These harms include:

- Online assessments without accommodations for candidates with limited vision or disabilities that made it difficult to use a keyboard or mouse;

- Selection systems trained on biased or unrepresentative data that excludes many qualified workers;

- Employee monitoring systems that set schedules so rigid that it’s impossible for employees to take breaks needed due to pregnancy, disability, or religious reasons; and

- Systems using illegal metrics, as in EEOC’s case against iTutor, involving an online recruitment system that automatically rejected older applicants based on their birth dates.

AB 1018 contains important guardrails when employment-related systems make high-stakes, consequential decisions about workers’ lives. It provides transparency that allows employees to challenge potentially discriminatory decisions and know when to request needed accommodations. Finally, it helps ensure employment decisions are based on merit, not inaccurate or discriminatory data, and that’s in everyone’s interests.

Thank you, and I look forward to your questions.

Samantha Gordon’s Testimony

Assembly Privacy and Consumer Protection Committee | April 22, 2025

Good afternoon, Assemblymember Dixon and members of the committee. Thank you for letting me speak today. My name is Samantha Gordon, and I’m Chief Program Officer at TechEquity. We’re a research and policy advocacy organization focused on ensuring tech is responsible for building prosperity for all and is held accountable for the harms it creates in our communities.

We are proud to sponsor AB 1018, which ensures Californians are protected from discrimination and error when automated decision systems (ADS) are used to make life-altering decisions, like whether someone’s medical bills are covered, or if they’re denied housing or a job.

We’ve seen real-life examples of algorithms that are unreliable and inaccurate because they rely on bad data or biased assumptions to make decisions. For example, a tenant screening system that denied people housing because they used public assistance, or a patient care tool that gave worse care recommendations to Black patients because it used healthcare spending as a proxy for health. Research from The Markup found that home lending algorithms in California were nearly twice as likely to deny a Black loan applicant compared to a white one, even when controlling for income, debt, and other financial characteristics.

AB 1018 is carefully scoped to address these types of decision-making systems, ones that contribute materially to important decisions like access to housing, jobs, education, and public services. It focuses on technologies that use large datasets and techniques like machine learning and statistical modeling to issue scores and recommendations. AB 1018 does not capture low-risk systems like calendaring and appointment software. For the consequential systems that are covered by this bill, it does the following:

- First, it requires developers [and deployers] to test these tools to ensure they’re accurate and don’t discriminate before they are sold and used on the public. It also ensures that these tests are verified by an independent third party.

- Second, it mandates clear notice to people when these tools are used to make critical decisions about their lives.

- Third, it provides an explanation of how these tools arrived at a decision, including what personal information they used, what factors the system considered, and what role the tool played in the outcome.

- And fourth, it gives people the right to opt out of the use of an ADS tool in a critical decision about them; they will be able to correct information that the tool used to make the decision if it is inaccurate; and they will have the right to appeal the decision.

ADS tools often operate as black boxes, denying individuals any ability to understand, correct, or dispute the decisions that profoundly impact their lives. AB 1018 is a common-sense bill to bring transparency, fairness, and safety into today’s tech landscape. Finally, the bill’s emphasis on transparency, risk assessment, and addressing adverse impacts reflects key recommendations from the Governor’s task force report on AI, and is an opportunity for California to remain a national leader in tech accountability.

We appreciate Assemblymember Bauer-Kahan’s leadership on this important issue and her willingness to work with various stakeholders to ensure that this legislation provides meaningful protections to those impacted by automated decision systems. We look forward to continuing to work with Assemblymember Bauer-Kahan on the bill and ask for your aye vote.

Now, your testimony

Have you seen examples of automated decision systems in your life? Do you think you were discriminated against or otherwise unfairly treated because of them? We want to hear your story. By sharing your experience, you help lawmakers and the public understand what changes need to happen to ensure a better tech economy for everyone.
